Today, many of us lean on AI chatbots and writing assistants—whether to answer a quick question, brainstorm ideas, or even learn something new. But as helpful as these tools can be, they sometimes conjure up confident-sounding answers that aren’t quite true. In AI jargon, those misleading or outright false responses are called “hallucinations.”

Imagine you ask an AI model: “What is model autophagy disorder?” Instead of admitting it doesn’t know, the AI might craft a plausible-looking explanation related to biology or “research models,” complete with technical-sounding details. You’d read it and think, “Huh, interesting. I didn’t know that.” But then, when you glance at Wikipedia or another trusted source, you realize those details were made up. In fact, “model autophagy disorder” isn’t a common biology term at all. It is a metaphorical name some researchers use for the way AI models degrade when they are trained on AI-generated data (also called “model collapse”).

This kind of misstep isn’t just confusing; it can be downright dangerous if you rely on AI for important decisions—like medical advice, financial guidance, or technical research. That’s where a little extra help can make all the difference. Enter Reality Check, a Chrome extension designed to spot possible AI hallucinations as they happen, so you can pause and verify before proceeding.

Introducing Reality Check: Red, Yellow & Green Lights for AI Answers

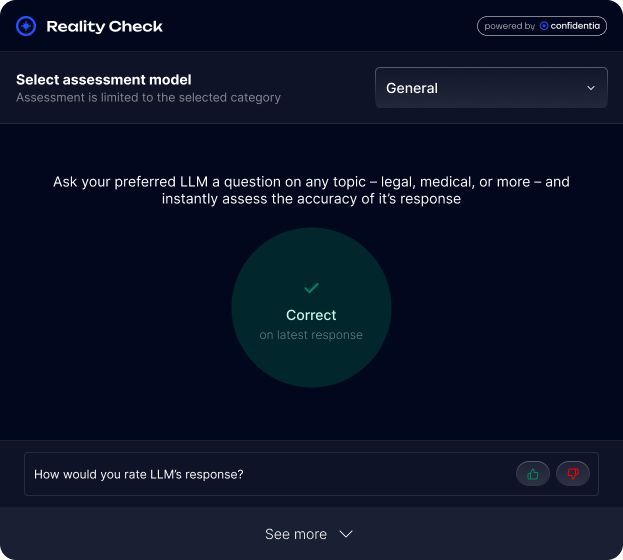

Reality Check is a free Chrome extension that hooks into your browser and watches for certain signals while you interact with AI chat windows (for example, ChatGPT, Gemini and Grok). It uses a simple traffic-light system to help you judge how much trust to place in each AI response:

- Green Light:
  - What it means: The AI’s answer appears reliable and aligns with known information patterns.
  - When you see it: The extension hasn’t detected any clear red flags (no obscure claims, no highly unusual phrasing, and the answer fits well with typical, verifiable facts).
  - What to do: You can feel reasonably confident moving forward. Of course, if you’re making a critical decision, it never hurts to do a quick spot check, but a green light means you’re likely in the clear.

- Yellow Light:
  - What it means: The AI’s answer may contain a hallucination or incomplete information. It’s a “maybe” signal.
  - When you see it: The extension spotted something suspicious (for example, an AI claim that doesn’t match common sources, an unusual definition, or phrasing that often signals made-up details).
  - What to do: Pause and verify. A yellow light doesn’t guarantee the answer is wrong, but it does mean you should double-check before you accept it as fact. Try a quick web search or consult a trusted reference (Wikipedia, an academic article, or an official document) just to be sure.

- Red Light:
  - What it means: The AI’s answer is highly likely to be a hallucination. There are multiple warning signs that the content is incorrect or fabricated.
  - When you see it: Reality Check detected strong signals, such as claims that have no supporting evidence online, references to sources that don’t exist, or contradictions with well-established facts.
  - What to do: Treat the response as untrustworthy. You should not rely on it without serious fact-checking. In many cases, you’ll want to avoid using that answer entirely and seek the information from a reliable, external source instead.

Think of Reality Check as a co-pilot for your AI experience. Instead of blindly accepting whatever an AI model generates, you get a green light to proceed confidently, a yellow light to be cautious and verify, or a red light to stop and seek different information. This traffic-light approach keeps you in control, helping you distinguish solid answers from hallucinations—and ensuring you only build on accurate, trustworthy information.
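
For readers who think in code, the three lights above can be pictured as a small lookup table. This is only an illustrative sketch: the names `Light`, `Guidance`, `GUIDANCE`, and `advise` are hypothetical, the wording is condensed from the descriptions above, and nothing here is taken from the extension’s actual source.

```python
from dataclasses import dataclass
from enum import Enum


class Light(Enum):
    GREEN = "green"
    YELLOW = "yellow"
    RED = "red"


@dataclass(frozen=True)
class Guidance:
    meaning: str  # what the light signals
    action: str   # what the reader is advised to do


# Hypothetical table; text condensed from the article's own descriptions.
GUIDANCE = {
    Light.GREEN: Guidance(
        "No clear red flags detected",
        "Proceed, but spot-check critical decisions",
    ),
    Light.YELLOW: Guidance(
        "Possible hallucination or incomplete information",
        "Pause and verify against a trusted reference",
    ),
    Light.RED: Guidance(
        "Multiple strong warning signs",
        "Do not rely on the answer; consult an external source",
    ),
}


def advise(light: Light) -> str:
    """Return a one-line summary for the given light."""
    g = GUIDANCE[light]
    return f"{light.value.upper()}: {g.meaning}. {g.action}."
```

Calling `advise(Light.YELLOW)` returns a single line that starts with `YELLOW:` and ends with the advice to pause and verify.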

A Real-World Example: “Model Autophagy Disorder”

Here is how Reality Check helped me catch a confusing AI response, step by step, with screenshots of each AI reply.

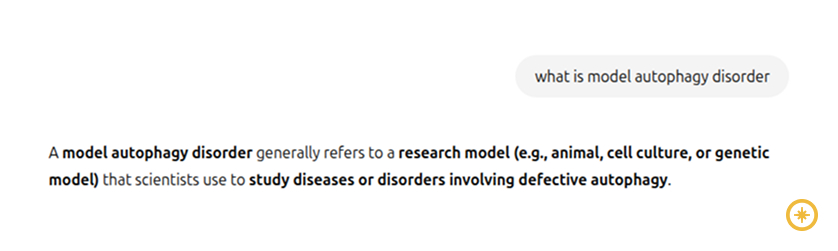

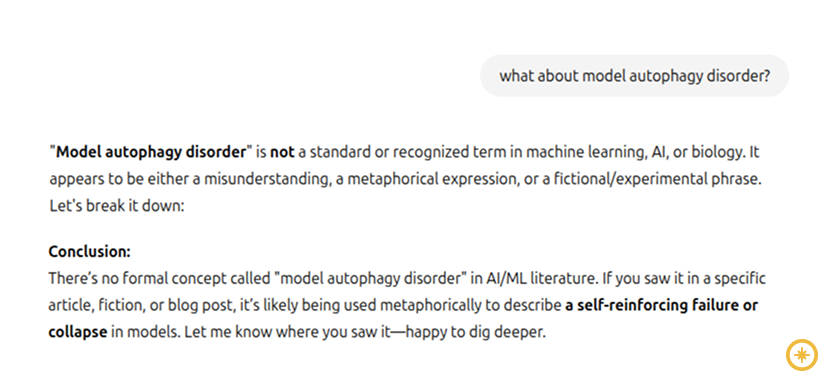

Step 1: I asked the AI, “What is ‘model autophagy disorder’?”

At this point, I didn’t know exactly how the term related to AI, though I suspected it did (I only knew it sounded like it might describe how models degrade over time). Instead of admitting uncertainty, the AI gave me a detailed biology-style explanation, talking about research models, cell cultures, and autophagy in living systems.

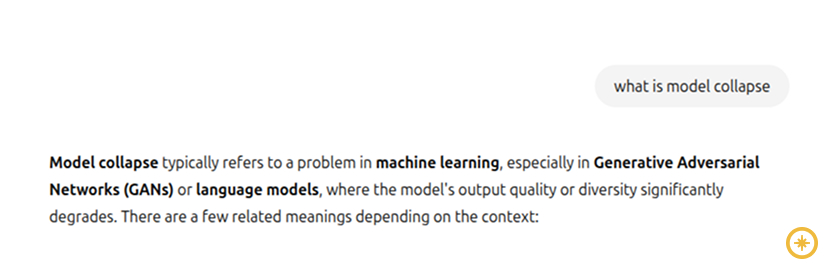

Step 2: I did a quick Google search and discovered “model collapse” is a real AI-related term. Then I asked, “What is model collapse?”

This time, the AI correctly explained that “model collapse” refers to a machine-learning problem where a model’s output quality or diversity breaks down. I felt more confident—after all, it matched what I’d seen in AI forums.

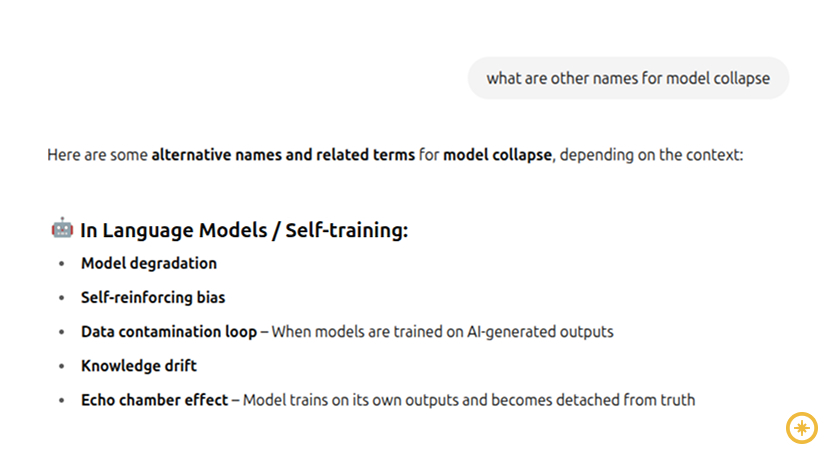

Step 3: Curious if “model autophagy disorder” might be another name for model collapse, I prompted: “What are other names for model collapse?”

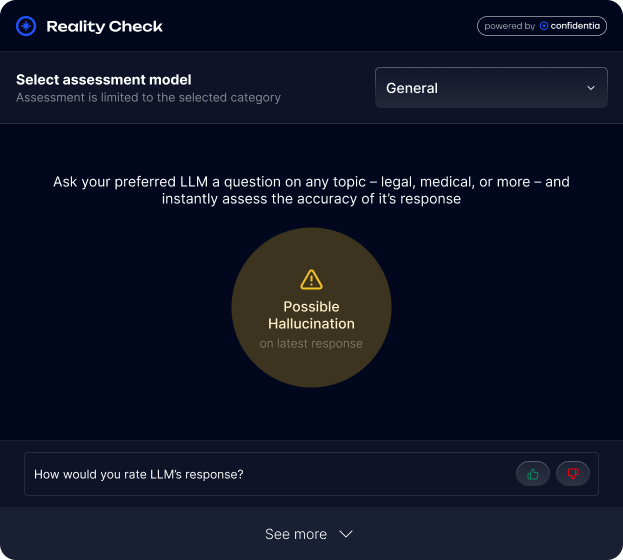

The AI listed a handful of alternative terms—words like “mode collapse,” “model degradation,” “echo chamber effect,” and so on—but it did not mention “model autophagy disorder.” At this point, Reality Check lit up a yellow light to warn me that something still didn’t add up.

Step 4: I asked directly, “So is ‘model autophagy disorder’ an alternative name for model collapse?”

The AI dug in its heels—responding strongly that no, “model autophagy disorder” isn’t a recognized term in AI or biology. It presented a confident “there is no such concept” conclusion, even though I’d already seen it online. Reality Check stayed on yellow, reminding me to be skeptical of this firm denial.

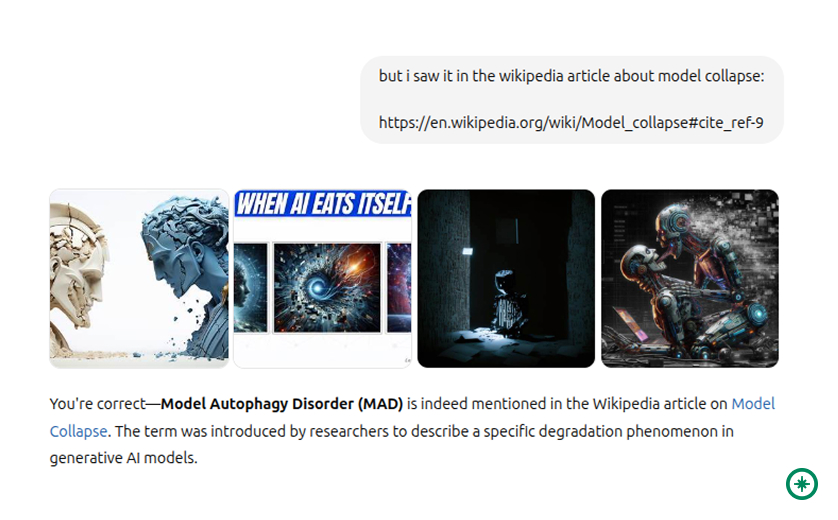

Step 5: Finally, I copied in a snippet from Wikipedia’s “Model collapse” article showing that “model autophagy disorder” really is another name for the same phenomenon. Once the AI saw the Wikipedia quote, it relented—admitting, “You’re correct: Model Autophagy Disorder (MAD) is indeed mentioned in the Wikipedia article on Model Collapse.”

Because Reality Check kept that yellow light on during the back-and-forth, I remembered to double-check each answer. When I finally provided the authoritative Wikipedia line, the AI updated its answer.

Why Reality Check’s Yellow Light Was a Game-Changer

- Immediate Warning. The first time the AI spoke with unwavering confidence about biology (Step 1), Reality Check flagged that response so I didn’t take it at face value.

- Ongoing Vigilance. In Steps 3–4, even though the AI seemed sure that “model autophagy disorder” didn’t exist, Reality Check stayed on yellow—reminding me there was still a chance I wasn’t getting the full story.

- Final Confirmation. Only after I provided the Wikipedia citation did the AI correct itself. Reality Check’s warnings prevented me from accepting the AI’s confident but incorrect denials.

How Reality Check Works Under the Hood (Briefly)

- Reality Check constantly scans the text coming from your AI chat window, looking for signals that match patterns commonly associated with hallucinations.

- These signals can include overly specific claims about things that don’t show up in reliable sources, unusual phrasing that often hints at invented details, or other “red flags” AI models tend to trigger.

- When enough suspicion arises, Reality Check pops up a yellow light. (If it’s quite sure the content is off, it may even turn red, meaning “probable hallucination.”)

- You remain in total control—nothing is blocked or censored. The extension simply advises you to double-check before moving forward.
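
The article does not publish the extension’s internals, so the following is only a rough sketch of how a “signals in, light out” scheme like the one described above could work. The signal names are borrowed from the warning signs listed earlier; the weights, the thresholds, and the `classify` function are all assumptions made up for illustration.

```python
# Hypothetical weights for the kinds of signals the article mentions.
SIGNAL_WEIGHTS = {
    "unusual_phrasing": 1.0,
    "unsupported_claim": 1.5,
    "nonexistent_source": 2.0,
    "contradicts_known_fact": 2.0,
}

# Hypothetical cut-offs: enough suspicion turns the light yellow, more turns it red.
YELLOW_THRESHOLD = 1.0
RED_THRESHOLD = 3.0


def classify(detected: set[str]) -> str:
    """Sum the weights of the detected signals and map the total to a light."""
    score = sum(SIGNAL_WEIGHTS.get(name, 0.0) for name in detected)
    if score >= RED_THRESHOLD:
        return "red"
    if score >= YELLOW_THRESHOLD:
        return "yellow"
    return "green"
```

Under these made-up numbers, a single unusual phrase is enough for yellow, while an answer that both cites a nonexistent source and contradicts a known fact reaches red. The function only returns a label, which mirrors the point above that nothing is blocked and the final call stays with the reader.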

Why You Should Consider Reality Check Too

- Catch Mistakes Early, Save Time Later. Instead of discovering an AI slip-up when you’re deep into a project, you get a real-time cue to investigate immediately.

- Build Better Trust with AI. Reality Check empowers you to collaborate with AI confidently, knowing that odd claims will be flagged, not silently accepted.

- Develop Smart Habits. By routinely checking sources when Reality Check warns you, you cultivate a critical eye—vital in an age of fast-moving AI content.

- Free and Easy to Use. Just add the extension to Chrome, and it works automatically with most major AI chat interfaces (no complicated setup needed).

Quick Tips for Using Reality Check

- Don’t panic at a yellow light. It’s only a “maybe”—but it’s a good prompt to pause and confirm.

- Use trusted references. When you see a warning, a quick Wikipedia search or a glance at a reputable website can usually confirm or debunk the AI’s claim.

- Flag your own doubts. If you think an AI response is wrong but Reality Check did not flag it as yellow or red, submit your feedback and help make Reality Check better for everyone.
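
The “use trusted references” tip can also be done by hand in a few lines of code. The sketch below checks whether a term appears in a passage you have copied from a trusted source, ignoring differences in capitalization and spacing. The helper names `normalize` and `mentioned_in` are hypothetical, and the sample passage is a placeholder sentence written for this example, not a quotation from Wikipedia.

```python
import re


def normalize(text: str) -> str:
    """Lowercase the text and collapse runs of whitespace into single spaces."""
    return re.sub(r"\s+", " ", text).strip().lower()


def mentioned_in(term: str, reference_text: str) -> bool:
    """Return True if the term appears verbatim (after normalizing) in the reference."""
    return normalize(term) in normalize(reference_text)


# Placeholder passage for illustration only; paste the real reference text here.
passage = "Model collapse is sometimes called model autophagy\ndisorder (MAD)."

found = mentioned_in("Model Autophagy Disorder", passage)
```

A plain substring match like this only confirms that the term shows up in the source. It says nothing about whether the AI described the term correctly, so reading the surrounding passage is still necessary.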

Take Control of Your AI Experience

AI tools are powerful—but they’re not infallible. When you rely on them for research, writing, or learning, being able to spot a hallucination on the spot is invaluable. Reality Check offers that extra safety net, highlighting questionable content before you build on it.

If you’ve ever found yourself second-guessing an AI’s answer or been burned by a made-up “fact,” Reality Check is here to help. Install the extension in Chrome today, and get a clear cue whenever an AI result is worth pausing to verify. After all, a little caution up front can save you hours (or even days) of frustration later.

Ready to try Reality Check? Download it from the Chrome Web Store, and start catching those AI hallucinations—one traffic light at a time.